
1 / 3[1] 0.3333333Burak Demirtas

April 2, 2023

One of my friends asked help on some R code today. She was running a correlation test and even the data is different, p-value was coming exactly the same number. So she assumed she was doing something wrong.

I checked the code, it was just fine and I saw the same thing: 2.2e-16 was the value popping up even we use different data.

I suspected that since it’s a very small number and R insisting on that number, it should be some sort of limit. When I do some quick search, bam! I came up with the term called “Machine Epsilon”.👾🤖

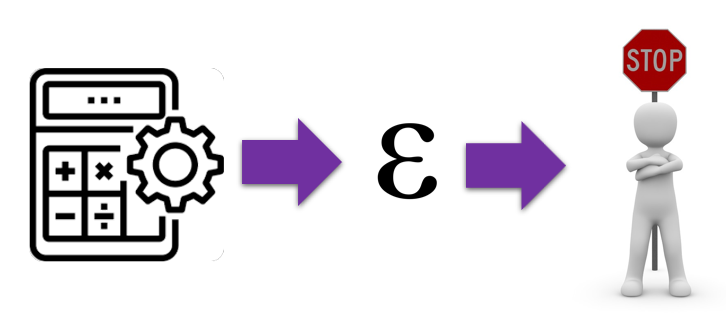

Before the term itself, let’s think that computer made a simple division:

Are these 3s really 7 times after 0? Or is it actually going to infinite number of 3s? We know there should be infinite number of 3s there even if we see just 7 here. Which means, normally, computer should have run this simple code forever right? But it didn’t. It stopped at some point and gave me the result. Why did it stop? Why your old school calculator or calculator on your phone stops?

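Because computers cannot run forever; they have to stop at some point and produce a result. Their resources and memory are finite too. So they HAVE TO stop somewhere. In R, a number like 1/3 is stored as a double-precision floating-point number with 53 binary digits of precision, which is about 16 significant decimal digits. And the seven 3s above are not even all of those: R prints only 7 significant digits by default (its `digits` option).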
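A quick way to compare what R shows with what it actually stores, using only base R functions. This is a small sketch that assumes the default `digits` option of 7 and IEEE 754 doubles, as on common platforms:

```r
# Display precision: R shows 7 significant digits by default.
getOption("digits")

x <- 1 / 3
print(x)                 # 0.3333333 (7 significant digits)
print(x, digits = 17)    # more of the digits actually stored

# Storage precision: a double keeps 53 binary digits,
# so the stored value is only an approximation of 1/3.
.Machine$double.digits
sprintf("%.25f", x)      # after about 16 digits, the 3s stop
```

Printing more digits does not make the number more precise; it only reveals where the stored approximation stops matching 1/3.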

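Machine epsilon describes where that precision runs out: it is the gap between 1 and the next larger number the computer can represent. For R's doubles, that gap is `2^-52`, about 2.22e-16. It is not the smallest number you can use, though: much smaller positive values exist (R's `double.xmin`, listed below, is about 2.2e-308). Instead, it measures relative precision: near 1, any change smaller than about this size is lost when the result is rounded. (In mathematics, the Greek letter epsilon is conventionally used for a small quantity.)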

The term was first introduced by James H. Wilkinson in his 1963 paper “Error Analysis of Direct Methods of Matrix Inversion”. In this paper, Wilkinson defined machine epsilon as “the smallest number which, when added to 1.0, yields a result distinct from 1.0”.

This definition is still valid: machine epsilon is the smallest number that can be added to 1.0 and produce a different result in the computer’s floating-point arithmetic.
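The definition is easy to check in the console. Here is a minimal sketch in base R, assuming IEEE 754 doubles with the usual round-to-nearest mode, where R's machine epsilon is exactly `2^-52`:

```r
eps <- .Machine$double.eps

eps == 2^-52          # TRUE: 52 stored fraction bits after the leading 1
(1 + eps) == 1        # FALSE: 1 + eps is the next double after 1
(1 + eps / 2) == 1    # TRUE: a tie, rounded to the even neighbor, which is 1

# Epsilon is relative to 1: near 1000 the gap between neighboring
# doubles is much larger, so adding eps changes nothing there.
(1000 + eps) == 1000  # TRUE
```

The last line shows why epsilon is a relative measure: the spacing between representable numbers grows with their size.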

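Then, what happens to values below this number? In R's printed test results, any p-value smaller than machine epsilon is reported as `< 2.2e-16`. The computed value itself is kept as it is; only the printout stops at this floor.

That's why my friend kept seeing the same p-value even though she changed the data. Her p-values were tiny, smaller than 0.00000000000000022 (2.2e-16), so R printed each of them as `p-value < 2.2e-16`. Since 2.2e-16 is R's machine epsilon, this was exactly what we should have expected.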
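To reproduce what she saw, here is a sketch with simulated data. The vectors `x`, `y1` and `y2` are made up for illustration; her real data is not part of this post. Both tests print `p-value < 2.2e-16`, although the stored p-values differ. The printout goes through base R's `format.pval()`, whose default cut-off `eps` is `.Machine$double.eps`:

```r
# Simulated, made-up data: two different, strongly correlated samples.
set.seed(1)
x  <- 1:100
y1 <- x + rnorm(100, sd = 10)
y2 <- x + rnorm(100, sd = 20)

res1 <- cor.test(x, y1)
res2 <- cor.test(x, y2)

# Both printed reports say "p-value < 2.2e-16" ...
res1
res2

# ... but the stored p-values are different, and both are below 2.2e-16.
c(res1$p.value, res2$p.value)
res1$p.value < .Machine$double.eps

# format.pval() with digits = 4 matches what the test's print method
# uses under the default digits option; both values become "< 2.2e-16".
format.pval(c(res1$p.value, res2$p.value), digits = 4)
```

If you need the actual number, read it from `$p.value` instead of relying on the printed summary.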

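To see it for yourself, just type `.Machine$double.eps` in the console; it returns `[1] 2.220446e-16`.

Do you want to see the full list? Go ahead and type just `.Machine`, without the `$` part: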

```
$double.eps
[1] 2.220446e-16

$double.neg.eps
[1] 1.110223e-16

$double.xmin
[1] 2.225074e-308

$double.xmax
[1] 1.797693e+308

$double.base
[1] 2

$double.digits
[1] 53

$double.rounding
[1] 5

$double.guard
[1] 0

$double.ulp.digits
[1] -52

$double.neg.ulp.digits
[1] -53

$double.exponent
[1] 11

$double.min.exp
[1] -1022

$double.max.exp
[1] 1024

$integer.max
[1] 2147483647

$sizeof.long
[1] 4

$sizeof.longlong
[1] 8

$sizeof.longdouble
[1] 16

$sizeof.pointer
[1] 8

$longdouble.eps
[1] 1.084202e-19

$longdouble.neg.eps
[1] 5.421011e-20

$longdouble.digits
[1] 64

$longdouble.rounding
[1] 5

$longdouble.guard
[1] 0

$longdouble.ulp.digits
[1] -63

$longdouble.neg.ulp.digits
[1] -64

$longdouble.exponent
[1] 15

$longdouble.min.exp
[1] -16382

$longdouble.max.exp
[1] 16384
```

So, another new term for me, one that was introduced 60 years ago. 🤓